Databricks Consulting Services for Enterprise Data and AI Modernization

Reduce Snowflake Data Management Cost by 30% in 3 Months Using Hybrid Databricks + Snowflake — Without Losing Features

1. Executive Overview

Cloud data platforms are now a critical enabler for every enterprise. Yet CFOs and CTOs are finding that their Snowflake bills often grow faster than expected, primarily because of compute credits spent on ELT/ETL workloads.

Our proposition is simple:

- Cut Snowflake compute costs by ~30% in 3 months by shifting heavy transformation workloads to Databricks Jobs/Photon.

- Retain Snowflake’s strengths — governed SQL, secure data sharing, BI performance.

- Enable future-proof growth with open formats, elastic scaling, and ML-ready data pipelines.

2. The Cost Challenge with Snowflake

For many enterprises, Snowflake’s pricing appears simple at first glance, but the reality is more complex: costs can quickly spiral if workloads aren’t carefully managed. Snowflake charges in two “currencies”:

- Compute Credits – Snowflake’s unit for measuring compute power. Virtual Warehouses and serverless features (Snowpipe, Tasks, Search Optimization, Query Acceleration) all consume credits, billed per second with a 1-minute minimum.

→ Example: Snowpipe Streaming costs 1 compute credit/hour + $0.01 per client instance, while Search Optimization costs 10 credits/hour.

- Storage (TB/month) – Billed on the average compressed volume of data stored (including permanent and transient tables).

→ Example: ~$23 per compressed TB/month in standard US regions.

While storage is predictable, most customers find that 70–80% of their spend comes from compute credits, with only 20–30% from storage. (Source: Cloudchipr)
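The per-second billing with a 1-minute minimum described above can be sketched in a few lines. This is a rough estimator, not Snowflake's billing logic: the function names are hypothetical, the credits-per-hour table assumes Snowflake's standard warehouse sizing (each size doubles the previous one), and real bills also depend on edition, region, and auto-suspend settings.

```python
# Assumed credits per hour for standard warehouse sizes (each size doubles the last).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}

# Billing is per second, with a 60-second minimum each time a warehouse starts or resumes.
MIN_BILLED_SECONDS = 60


def warehouse_credits(size: str, run_seconds: float) -> float:
    """Estimate credits consumed by one continuous run of a warehouse."""
    billed_seconds = max(run_seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


def monthly_cost(size: str, run_seconds: float, runs_per_month: int,
                 price_per_credit: float) -> float:
    """Estimate monthly spend for a job that runs the same way each time."""
    return warehouse_credits(size, run_seconds) * runs_per_month * price_per_credit


# A 45-second query on a Medium warehouse is still billed for 60 seconds.
short_run = warehouse_credits("M", 45)
full_hour = warehouse_credits("M", 3600)
print(f"{short_run:.4f} credits for a 45 s run, {full_hour:.1f} credits for a full hour")
```

Plugging in your own warehouse sizes, run times, and contracted credit price gives a first estimate of where compute spend concentrates.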

Why This Becomes a Cost Problem

The issue isn’t understanding Snowflake’s model — it’s how workloads behave within it. Compute-heavy tasks like ETL pipelines often run longer, overlap with BI queries, or require oversized warehouses, making costs balloon in ways storage never does. On top of that, serverless features and egress fees quietly add recurring expenses that are easy to overlook.

Key Challenges for CTOs & CFOs

- ETL and BI workloads compete on the same warehouses, inflating credit consumption.

- Idle or oversized clusters create hidden waste (credits burn even when underutilized).

- Serverless features add silent recurring charges.

- Data egress (out of Snowflake) is billed per GB and grows with external sharing.

Note: Reserved capacity discounts, regional price differences, and promotional credits can impact costs and should be factored into budgeting.

Snowflake charges in compute credits (for warehouses and serverless features) and TB/month (for storage). Databricks, by contrast, bills in DBUs (Databricks Units), a per-second unit tied to workload type and the underlying cloud virtual machines.

This difference is crucial:

- Snowflake warehouses burn credits for as long as they are running, even if idle or oversized, and serverless features like Snowpipe or Search Optimization add recurring charges.

- Databricks job compute spins up only when needed, processes the workload, then shuts down, meaning you pay only for the actual work, not for idle time.

What is Databricks Compute?

Databricks compute refers to the clusters of virtual machines (VMs) that execute your workloads — jobs, SQL queries, or ML models. The cost equation is simple:

Databricks Cost = DBUs consumed × DBU rate + Cloud VM cost

Each workload type consumes DBUs differently, which gives flexibility but also requires understanding to avoid cost inefficiency.
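The cost equation above translates directly into code. The sketch below is illustrative only: `databricks_cost` is a hypothetical helper, and the DBU counts, DBU rates, and VM charge in the example are assumed values you would replace with figures from your own account.

```python
def databricks_cost(dbus: float, dbu_rate: float, cloud_vm_cost: float = 0.0) -> float:
    """Databricks Cost = DBUs consumed x DBU rate + Cloud VM cost."""
    return dbus * dbu_rate + cloud_vm_cost


# Assumed example: the same 80-DBU pipeline priced at two different DBU rates,
# with a hypothetical $10 cloud VM charge added in both cases.
jobs_cost = databricks_cost(dbus=80, dbu_rate=0.15, cloud_vm_cost=10.0)
all_purpose_cost = databricks_cost(dbus=80, dbu_rate=0.55, cloud_vm_cost=10.0)

print(f"Jobs compute:        ${jobs_cost:.2f}")
print(f"All-Purpose compute: ${all_purpose_cost:.2f}")
```

Keeping the VM charge as a separate argument makes it harder to forget that the DBU charge is only part of the bill.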

Databricks Compute: Types & How to Choose

Databricks offers workload-specific compute types, making it easier to align cost with purpose.

| Compute Type | Best For | DBU Cost Range | Key Notes |
| --- | --- | --- | --- |
| Jobs Compute / Jobs Photon | ETL/ELT pipelines, batch jobs, production workloads | ~$0.15/DBU (AWS Premium) | Lowest cost. Photon improves throughput per DBU → cheaper pipelines. |
| All-Purpose Compute | Interactive notebooks, collaboration, data exploration | ~$0.40–$0.55/DBU | 3–4× more expensive than Jobs. Use only for exploration, not production. |
| SQL Warehouses | BI dashboards, analytics queries | Varies by edition; Serverless is pricier | “Classic” or Serverless (no cluster mgmt). Serverless trades control for convenience. |
| Model Serving | Deploying ML models as APIs (real-time inference) | DBUs + per-request cost | For ML/AI workloads; auto-scales serverless endpoints. |

Pricing Insights: Why Databricks Comes Out Cheaper for ETL

- Snowflake: A Medium warehouse (4 credits/hour) running ETL for just 3 hours/day consumes roughly 360 credits in a 30-day month → about $720–$1,080 at $2–$3 per credit. Add serverless features like Snowpipe (1 credit/hr) or Search Optimization (10 credits/hr) and hidden recurring charges pile up.

- Databricks Jobs: An equivalent ETL pipeline (~80 DBUs at ~$0.15/DBU) costs about $12 in DBU charges. Even if mistakenly run on All-Purpose (~$0.55/DBU), the cost is about $44, still far below Snowflake. Cloud VM charges are billed on top.

Why This Matters

- Lower unit price: Databricks Jobs DBUs are substantially cheaper than Snowflake credits for the same ETL workloads.

- More predictable bills: With auto-termination and job scheduling, there’s less risk of runaway compute costs.

- Better for ML/AI: Databricks naturally supports Python/ML pipelines — workloads that would otherwise burn expensive Snowflake credits inefficiently.

- Open formats = flexibility: Databricks stores data in Delta/Parquet, avoiding lock-in and giving you more control over long-term storage costs.

Note: While this cost advantage is compelling, workloads with complex SQL transformations or compliance restrictions may face migration challenges, and full evaluation should precede any changes.

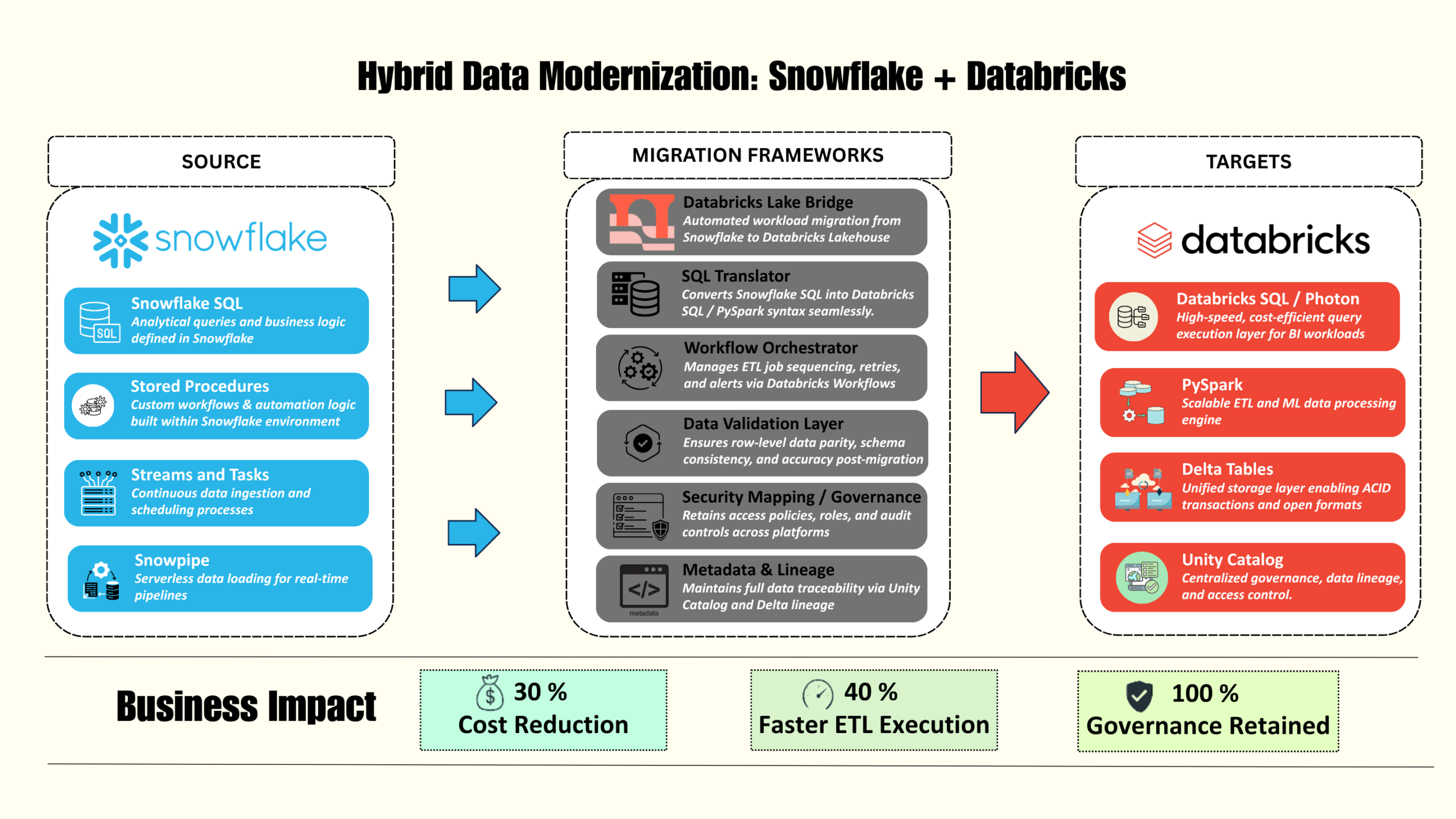

4. Hybrid Architecture — Right Tool for the Right Workload

The most effective model isn’t choosing between Snowflake or Databricks — it’s combining them, with each platform doing what it does best.

- Data Ingest & Transformation (Bronze/Silver Layers): Run pipelines on Databricks Jobs/Photon, optimized for ETL and large-scale data prep at lower cost.

- Curated Semantic Models (Gold Layer): Store and serve in Snowflake, ensuring governed, secure, and SQL-friendly access for business users.

- Governance & Security: Both platforms enforce strong governance — with lineage, role-based access, and compliance controls intact across the hybrid model.

- Consumption & Analytics: Analysts, BI dashboards, and business applications continue to query Snowflake seamlessly, without disruption.

Why This Hybrid Works

- Performance for BI: Snowflake warehouses stay dedicated to reporting and analytics, unaffected by heavy ETL workloads.

- Efficiency for Engineering: Data engineers gain flexibility and lower costs by leveraging Databricks for transformations.

- Financial Impact: Compute-heavy work shifts to the cheaper engine (Databricks), while storage and analytics remain in Snowflake, reducing total cost of ownership (TCO).

It is important to acknowledge that managing a hybrid environment requires integration efforts, cross-team coordination, and possible overhead related to maintaining two systems, connectors, and monitoring.

5. Financial Impact — A Sample Model

To illustrate the savings potential, let’s model a mid-market customer running ETL pipelines and BI workloads on Snowflake today.

Baseline: All-in Snowflake

- Compute: 4,500 credits/month × $2.50/credit = $11,250

- Storage: 50 TB × $23/TB = $1,150

- Total = $12,400/month

In this setup, ETL pipelines consume oversized warehouses, running for hours alongside BI queries — driving most of the compute spend.

Optimized Hybrid: Snowflake + Databricks

- Snowflake Compute (BI only): Reduced to ~2,100 credits = $5,250

- Databricks Jobs (ETL): 12,000 DBUs × $0.18/DBU = $2,160

- Storage: 50 TB (unchanged) = $1,150

- Total = $8,560/month

By offloading ETL pipelines to Databricks Jobs/Photon, Snowflake credits are cut by more than half (4,500 → 2,100), and Databricks executes pipelines at a fraction of the cost.

The Impact

- Baseline (Snowflake-only): $12,400

- Hybrid (Snowflake + Databricks): $8,560

- Net Savings: $3,840/month (~31% reduction)

Over a year, this equals $46,000+ in savings for a mid-sized deployment — without losing any Snowflake functionality for BI users.

Actual savings depend on workload specifics, regional costs, reserved pricing contracts, and execution efficiency. Using pricing calculators and testing with your own workloads is essential.
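The sample model can be reproduced in a short script so that you can swap in your own rates. This is a minimal sketch: the `Scenario` class and its field names are hypothetical, and the inputs are the figures from this section. Like the model above, it counts only DBU charges on the Databricks side, so add cloud VM costs if you want them included.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    snowflake_credits: float     # credits per month
    credit_price: float          # $ per credit
    storage_tb: float            # compressed TB stored
    storage_price_per_tb: float  # $ per TB per month
    databricks_dbus: float = 0.0
    dbu_rate: float = 0.0        # $ per DBU

    def total(self) -> float:
        """Monthly cost: Snowflake compute + storage + Databricks DBU charges."""
        compute = self.snowflake_credits * self.credit_price
        storage = self.storage_tb * self.storage_price_per_tb
        databricks = self.databricks_dbus * self.dbu_rate
        return compute + storage + databricks


# Inputs taken from the sample model in this section.
baseline = Scenario(4500, 2.50, 50, 23)
hybrid = Scenario(2100, 2.50, 50, 23, databricks_dbus=12000, dbu_rate=0.18)

monthly_savings = baseline.total() - hybrid.total()
savings_pct = 100 * monthly_savings / baseline.total()

print(f"Baseline: ${baseline.total():,.0f}/month")  # $12,400/month
print(f"Hybrid:   ${hybrid.total():,.0f}/month")    # $8,560/month
print(f"Savings:  ${monthly_savings:,.0f}/month ({savings_pct:.1f}%)")  # $3,840/month (31.0%)
print(f"Annual:   ${12 * monthly_savings:,.0f}")    # $46,080
```

Changing a single input, such as the credit price or the number of credits left on Snowflake, shows how sensitive the ~31% figure is to your own workload mix.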

Tools to Validate Your Numbers

Every organization’s rate and region differ. To test this model with your own workloads, use the pricing calculators and rate cards that apply to your platforms, region, and contract.

6. From Roadmap to Results — Why Hybrid Wins

Moving to a Databricks + Snowflake hybrid is not a risky overhaul but a fast, structured path to savings:

- Weeks 1–4: Baseline usage, tag workloads, and migrate top ETL pipelines to Databricks Jobs/Photon.

- Weeks 5–8: Right-size Snowflake BI warehouses, enable auto-suspend, and optimize credits.

- Weeks 9–12: Strengthen governance and deploy a FinOps dashboard for ongoing visibility.

Through this approach, you cut idle credit waste, separate ETL from BI, and unlock efficiency — without changing how analysts use Snowflake.
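The Weeks 1–4 step (baseline usage, tag workloads, pick the top ETL pipelines) can be sketched as a simple aggregation. Everything here is hypothetical: the function names, the tag names, and the sample rows are made up for illustration, and in practice the rows would come from your own tagged usage export.

```python
from collections import defaultdict


def credits_by_tag(usage_rows):
    """Sum credits per workload tag from (tag, credits) rows."""
    totals = defaultdict(float)
    for tag, credits in usage_rows:
        totals[tag] += credits
    return dict(totals)


def migration_candidates(totals, etl_tags, top_n=3):
    """Return ETL-tagged workloads ranked by credits, highest first."""
    etl = [(tag, credits) for tag, credits in totals.items() if tag in etl_tags]
    return sorted(etl, key=lambda item: item[1], reverse=True)[:top_n]


# Hypothetical month of tagged usage: (workload tag, credits consumed).
rows = [
    ("etl_orders", 900.0),
    ("bi_dashboards", 1500.0),
    ("etl_events", 1400.0),
    ("etl_orders", 300.0),
    ("adhoc_sql", 400.0),
]

totals = credits_by_tag(rows)
for tag, credits in migration_candidates(totals, {"etl_orders", "etl_events"}):
    print(f"{tag}: {credits:,.0f} credits")
```

Ranking by credits keeps the first migration wave focused on the pipelines that account for most of the ETL spend.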

What stays the same: BI workflows, governance, and security.

What improves: Faster queries, lower ETL costs, and open data formats for future flexibility.

At Nallas, we develop playbooks, financial models, and migration frameworks to help your enterprise achieve ~30% cost savings in just 3 months while keeping analytics uninterrupted.

The takeaway: The challenge with Snowflake isn’t storage, it’s runaway compute. The solution isn’t to replace Snowflake, but to complement it with Databricks. Together, they deliver the best of both worlds — cost efficiency, performance, and future readiness.